Monday, 11 October 2004

[Last Week] [Monday] [Tuesday] [Wednesday] [Thursday] [Friday] [Saturday] [Sunday] [Next Week]

[Daynotes Journal Forums] [HardwareGuys.com Forums] [TechnoMayhem.com Forums]

[Five Years Ago Today]

08:44

- If you were among the fifty or so subscribers who signed up

for a review copy of Building the

Perfect PC,

you should be getting it and a t-shirt very soon, if you haven't

already. The sooner you can write a review of the book and post it to

Amazon, the better. (You can post it to other places such as B&N,

other bookseller sites, user group sites, etc. as well, although

Amazon.com is the really critical place to post it.)

A couple of people have asked me for guidelines about writing a

review. I don't want to influence anyone, so all I'll say is that you

should write however much or however little you wish about the book,

focusing on (a) what you thought of the book generally, (b) anything

you particularly liked or disliked, or thought was useful, or thought

could have been done better. Personal observations and anecdotes are

helpful. When I'm writing a review, I often find it helpful to read

through the reviews that have already been posted to help me remember

points I wanted to make, to take issue with comments in other reviews

with which I disagree, and so on.

But the really important thing is to get your review posted to

Amazon as quickly as you can. Initial momentum counts for a lot.

I spent some time yesterday playing with Nvu,

which is an enhanced version of Mozilla Composer, sponsored by

Linspire. Nvu isn't FrontPage, not by a long shot, but does add many

nice features that are not present in Composer, not least of which is

an ftp site management function.

Barbara has become quite frustrated with the primitive publishing

functions available with Composer, as have I. Perhaps the worst aspect

of publishing with Composer is that it focuses on one page and two

directories--the directory that contains the page itself and a single

directory that contains images for that page. If, for example, a page

contains images that are stored in two different directories, there is

no convenient way to publish the page and both sets of images.

There is also a problem with publishing defaults. It seems to me

that it would be reasonable to store the publishing parameters with the

page, so that if you published the page repeatedly (as we do with our

journal pages) the publishing parameters would be stored the first time

the page was published and then used subsequently until they were

changed manually.

It doesn't work that way with Composer. It insists, for example, on

trying to publish Barbara's journal page to her researchsolutions.net

site rather than to her fritchman.com site. Each time she publishes her

journal page, she has to remember to change the site to which she is

publishing from the default researchsolutions.net to fritchman.com. If

she forgets, the result is not a pretty sight (or site). I spent some

time yesterday using the gFTP client to delete extraneous files

manually from Barbara's site (and mine).

There are other problems with the Composer Publish function. For

example, incredibly, it is incapable of creating a directory on the

server. I found this out by chance. Barbara was attempting to put

together a new page that documented her recent trip to Vermont. After

struggling mightily with Composer's problems with directories and so

on, I decided to create a new root-level folder on her site, named

/vermont-2004. I put the page itself, vermont-2004.html, into that

directory, along with all of her images, both the full-size versions

and the thumbnails I'd had to generate manually with Irfanview. When I

attempted to publish the page and associated images, Composer's Publish

function blew up with an unhelpful error message. As it turned out,

that was because the /vermont-2004 directory did not exist on the

server. I had to go in with Xandros File Manager and create that

directory manually before she could publish to it.

Speaking of which, when I mentioned that I was looking for a good

GUI ftp client for Xandros, several people mentioned that I could

simply enter the ftp address in the URL bar of Xandros File Manager.

That's true enough, but the problem is that XFM isn't much of an ftp

client. For example, when I attempted to use it to publish Barbara's

/vermont-2004 directory by copy/paste from the local drive to the ftp

site, XFM blew up repeatedly with a message about too many connections.

From the little I've used it, gFTP seems to be a very competent GUI

ftp client, so I'll probably use it in combination with Composer's or

Nvu's Publish function--the latter when I'm simply publishing my

journal page routinely, and the former when I have multiple files to

publish, particularly if they're located in other directories.

Incidentally, one other nice thing about Nvu is that it produces

nicely formatted HTML source. Composer produces hideous-looking source,

with excess carriage returns and <br> tags all over the place,

lines that scroll

horizontally for several screen widths, and so on. Nvu provides nicely

formatted, compact HTML code.

Nvu is available for download from Xandros Networks, but only for

Premium

subscribers, which I'm not. Because I only wanted to experiment with

Nvu, I simply downloaded the tarball for Linspire (which is also

Debian-based), stuck it in my junk directory, extracted it, and ran Nvu

by running the executable script in the Nvu directory. So, it's not

installed, but I can use it. If I decide to use it permanently, I'll

get a Xandros-packaged install file.

Here's something

incredible.

For those of you who don't read German, these articles report that,

beginning next April, Germans who have a home PC will be required to

pay about $20 per month to subsidize public broadcasting. The fee is

waived if they already pay a television license, so in essence this

means that those who have a PC but do not watch public TV will be

forced to pay for public TV anyway. Note that this is not a tax in the

usual sense. The German government will collect it on behalf of public

TV. I hope this doesn't give PBS any ideas...

I've railed on about such subsidies at length in the past. They do no

good, and in fact do much harm. They favor entrenched interests at the

expense of innovation. Your phone bill is a good example. For most of

us, only a small fraction of what we pay each month is actually paying

for our telephone service. Most of it is subsidies, both visible and

invisible, direct and indirect.

Jerry Pournelle, for example, recently noticed that he was paying

something like $8/month to AT&T on a phone line that is never used

to place any long-distance calls, indeed one that places no outgoing

calls at all. This "pay us whether or not you use our service" is a

relatively new phenomenon, but is growing fast. That's not the worst of

it, though.

The worst is all the subsidies we pay for so-called "universal access".

As I recall, a basic phone line in Winston-Salem is something like

$14/month (nominally, that is; the actual cost is much, much higher).

Of that $14, probably less than $5 (perhaps much less) goes to covering

the cost of providing a phone line within Winston-Salem. The rest is a

concealed subsidy that is spent on other things, such as providing

$14/month phone lines to people who live out in the sticks.

Out there, the actual cost of providing a phone line may be $50, $100,

or even $200/month, but rural residents pay only the standard $14,

leaving the rest of us to make up the difference. And what is really,

truly annoying is that if they want a second phone line, they pay only

$14/month for it as well. You'd think the first subsidized line would

have taken care of whatever supposed obligation there is to provide

"universal access" and that the phone company would charge them the

true cost for second and subsequent lines, but no.

The result of these subsidies, of course, is that there is no reason to

look for better methods. Why consider doing things a new way when it

costs only $14/month to have a phone line out in the middle of nowhere?

If there were no subsidies, people would be looking for more cost-effective

ways of providing telephone service to rural users, such as building a

cellular or Wi-Fi network. Maintaining mile upon mile of copper wire is

insane when there are better, cheaper methods readily available. The

problem is, they don't appear cheaper, because the subsidies have artificially

reduced the apparent costs of doing things the old way.

And people are tiring of those subsidies, which is one of the main

reasons that Vonage and similar VoIP (Voice over IP) companies are

growing like crazy. There's a price war breaking out now. AT&T

reduced their all-you-can-eat VoIP plan to $29.95/month, so Vonage

dropped theirs to $24.95. That's still greatly in excess of the actual

costs involved, so there's a lot of room for them to drop prices

further still. And it's not just the telephone companies. Time-Warner

cable constantly runs ads soliciting our VoIP business. They put a

circular in every bill. It's a gold rush now, because their incremental

costs to provide the service are very small relative to the current

monthly rates for service. Eventually, over the next few years, the

cost of VoIP service will stabilize at something closer to the actual

costs involved. At that point, I expect we'll see VoIP service selling

for perhaps $5/month or less over the cost of our broadband

connections.

All you can eat, indeed. There's no real point to billing by the

minute, because the billing costs would make up the bulk of the bill.

VoIP providers can make more money by setting the charges for the

service slightly higher and not tracking usage. Nor is there any point

to billing by distance. IP doesn't care whether I'm talking to someone

across town or across the ocean. The real costs are the local loop,

whether that loop happens to be copper wire to the CO, a coax or

optical cable to the cable modem company, or a Wi-Fi wireless network.

Once your packets are outside the local loop and on the backbone, the

incremental cost to move one more packet is trivially small. When

Pournelle picks up the phone and calls me, for example, the vast

majority of the costs are in the few miles of local loop on his end and

the few more miles of local loop on my end. The thousands of miles

between Los Angeles and Winston-Salem account for a tiny, tiny fraction

of the cost.

What's interesting to me is that neither I nor most of my

technically-competent friends use VoIP. When Pournelle or Bilbrey wants

to call me, he picks up the phone and dials. At three cents a minute

(or whatever), who cares? The obvious moral here is that none of us

much cares about the cost of long distance. What may eventually

motivate all of us to move to VoIP is the monthly cost of local

service. That and the inexpensive or free features available with VoIP

service--automated attendant, voice mail, conferencing, caller ID, and

so on. Some of those services are also available with traditional

telephone service, but typically only if you're willing to pay a stiff

monthly fee.

My guess is that most of us will begin our VoIP experience by using

Skype or another free VoIP application that's designed primarily to

connect to others using the same software. That provides free long

distance, but does nothing about the monthly bill for local service.

It'll be interesting to see where things go from there. Video

conferencing, shared whiteboards, and so on would be logical

extensions. It's going to be an interesting next few years.

And some Linux apps do have some bugs...

Don't look for much around here over the next week or two. I have some

killer deadlines...

14:36

- I just sent the following message to Jerry Pournelle:

Honors colleges appear to be all the rage...

<http://www.cnn.com/2004/EDUCATION/10/11/honors.college.ap/index.html>

I wasn't aware of this phenomenon, but it appears to fit right into

what we've been discussing. Could this be the first step in the coming

bifurcation of higher education into "real" colleges, attended by real

students, and "pretend" colleges which are in business simply to take

money from so-called students and issue them credentials they haven't

earned? And indeed it may be one of the first steps toward a

bifurcation of society in general, into a tiny group who are truly

educated and a huge group who have only worthless, unearned credentials.

I haven't believed for years that any degree, including a Ph.D., from

any but a very few US colleges and universities is evidence of

anything. Oh, a hard-science or engineering degree from MIT, Caltech,

Duke, or a few other schools still means something, but that's about

it. Even the MD has been watered down by reduced admission requirements

intended to encourage diversity, whatever that means.

I wonder if schools are finally beginning to realize that they've lost

all credibility and are attempting to establish new, meaningful brand

names for themselves. I hope so.

Which is all true, but not Politically Correct. If someone tells me he

has a degree in Chemistry, Physics, Engineering, or another rigorous

discipline from, say, Winston-Salem State University, that means

absolutely nothing to me. I don't even take it as evidence that the

person can read, literally. And if someone tells me he has a degree in

a non-rigorous discipline from any school, I take that as an indication

that he has no education at all. Unfortunately, I'll be right more

often than I'm wrong.

Tuesday, 12 October 2004

09:53

-

Pournelle sometimes asks his readers what additional capabilities they

would like computers to have. Invariably, his readers say they want

things that would require processors and other components several

orders of magnitude more powerful than we have now. Let's face it, the

fastest AMD or Intel desktop computer doesn't have the IQ of a

cockroach.

But I thought about something yesterday I'd really like to see, and

it's within the capabilities of current systems. I want intelligent

agents based on Operations Research methods to do my bidding. For

example, say I've just discovered an

author I really like. I want to buy all of her books, but most are out

of print. That means going to someplace like ABE Books and searching

down titles one by one. If she's published a dozen books, that means

finding all twelve individually. If I want a reading copy and a

collection copy of each, that's 24 separate searches, and probably 24

separate orders.

With my OR-based agent, I could simply enter various parameters and

have the agent do all the work. For example, I may want

hardback reading copies of all her books, but I may also want

first state (first edition/first printing) copies in Fine/Fine

or better condition, signed by the author. I want all 24 books at the

lowest overall cost, including shipping.

If I try to do that manually at ABE

Books, I have to do 24 separate searches, two for each title, because

the books listed in the ABE

Books database are actually in inventory at about 12,500

bookstores throughout the world. So, I can go through book-by-book and

find the best price, counting the

price of the book and shipping costs, for each book individually, but

not for the two dozen I want to buy as a group.

Because shipping can add

significantly to the cost, buying each individual book from the seller

with the apparent lowest price for it is often not optimal. My OR-based

agent would optimize for lowest cost, assuming that solution also met

the other constraints. For example, I might really want one particular

reading copy quickly. I may have already read the first four books in

the series, for example, and decided on that basis that I want reading

and collection copies of all of them. But my library doesn't have books

five, seven, and eleven in the series, so if I want to continue reading

the series in sequence I need book five (and possibly seven) quickly.

So I tell my OR-based agent that I want priority on delivering the

reading copy of book five (and possibly seven). It goes back and checks

the additional costs for expediting shipping from all the sources (not

just the ones it decided on initially) and tells me that it can have

books five and seven to me in two days, but at an extra cost of $12. It

also suggests that if I'm willing to wait three days, I can have books

five and seven in that time for only $3 more than the optimum solution

it calculated based on price. Alternatively, I can have book five in

two days for only $6 additional if I'm willing to wait four to five

days for book seven. And so on.
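To make the "cheapest per book is not cheapest overall" point concrete, here is a minimal Python sketch of the core calculation. Everything in it is an assumption for illustration: the titles, sellers, and prices are made up, each seller is assumed to charge one flat shipping fee per order, and the search is plain brute force rather than a real Operations Research solver, so it would only be practical for a handful of books.

```python
from itertools import product

# Hypothetical catalog: price of each title at each seller that stocks it.
PRICES = {
    "Book 1": {"SellerA": 10.00, "SellerB": 8.00},
    "Book 2": {"SellerA": 12.00, "SellerC": 11.00},
    "Book 3": {"SellerA": 9.00, "SellerB": 9.50, "SellerC": 8.50},
}
# Assumed flat shipping charge per order, however many books it contains.
SHIPPING = {"SellerA": 4.00, "SellerB": 5.00, "SellerC": 6.00}


def total_cost(assignment):
    """Book prices plus one shipping charge for every seller actually used."""
    books = sum(PRICES[title][seller] for title, seller in assignment.items())
    shipping = sum(SHIPPING[seller] for seller in set(assignment.values()))
    return books + shipping


def cheapest_per_book(excluded=()):
    """Naive approach: pick the lowest sticker price for each title separately."""
    return {
        title: min((s for s in offers if s not in excluded), key=offers.get)
        for title, offers in PRICES.items()
    }


def cheapest_overall(excluded=()):
    """Try every seller combination and keep the one with the lowest total."""
    titles = list(PRICES)
    choices = [[s for s in PRICES[t] if s not in excluded] for t in titles]
    candidates = (dict(zip(titles, combo)) for combo in product(*choices))
    return min(candidates, key=total_cost)


if __name__ == "__main__":
    for label, plan in (("per book", cheapest_per_book()),
                        ("overall", cheapest_overall())):
        print(label, plan, total_cost(plan))
```

With these invented numbers, shopping book by book spreads the order over two sellers and pays two shipping charges, while the exhaustive search puts all three books with one seller whose sticker prices are higher but whose single shipping charge makes the total lower. The `excluded` argument is one simple way to express a constraint such as ruling a seller out.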

So I accept the second alternative proposed solution and tell my agent

to print me out a list of the proposed orders. But wait. In scanning

over the proposed solution, I see that my OR-based agent proposes to

order one of the collection copies from a bookseller that I've done

business with in the past and found to be unreliable. So I tell the

agent to remove that bookseller from consideration and recalculate.

The new solution increases the total price by only a few bucks, so I

tell my OR-based agent to go ahead and generate the orders. The final

solution orders the 24 books from 14 different bookstores. My agent

goes to the inventory database of each of the 14 stores and verifies

that the books are still available. It finds that 23 of them are in

fact in stock, but one has been sold and the database not yet updated.

So my agent re-runs against this new information and pops up a message

to tell me the solution I accepted is unworkable. The new proposed

solution is acceptable to me, although it involves 15 orders rather

than 14, so I tell my agent to go ahead and place the orders. It again

verifies in-stock status for each of the 24 books (this time at some

different stores) and finds that all are in stock. As it verifies each

book, it places a hold on it, but does not yet complete the order.

Once it has holds on all 24 books, it generates actual orders for all

24 books, sending the orders directly to the bookstores and notifying

me as each order is placed. My agent then uses the UPS and FedEx

tracking numbers provided to it by the bookstores to monitor delivery

status and to keep me informed of what's showing up when.
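The hold-everything-first, order-afterwards step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Store` class and its `hold`, `release`, and `order` methods are hypothetical stand-ins, not an interface any bookseller is known to offer.

```python
class Store:
    """Hypothetical stand-in for a bookstore's inventory interface."""

    def __init__(self, name, in_stock):
        self.name = name
        self.in_stock = set(in_stock)
        self.held = set()
        self.ordered = []

    def hold(self, title):
        """Reserve a title; returns False if it is no longer available."""
        if title not in self.in_stock:
            return False
        self.in_stock.remove(title)
        self.held.add(title)
        return True

    def release(self, title):
        """Give a held title back to inventory."""
        self.held.remove(title)
        self.in_stock.add(title)

    def order(self, title):
        """Turn a hold into an actual order."""
        self.held.remove(title)
        self.ordered.append(title)


def place_orders(plan):
    """plan is a list of (store, title) pairs. Returns True if all were ordered."""
    holds = []
    for store, title in plan:
        if not store.hold(title):
            # One title is gone: undo every hold so nothing is half-ordered.
            for held_store, held_title in holds:
                held_store.release(held_title)
            return False
        holds.append((store, title))
    # Every book is reserved, so it is now safe to commit all the orders.
    for store, title in holds:
        store.order(title)
    return True
```

If any hold fails, the function releases what it has reserved and reports failure, which is the point at which the agent would re-run the optimization against the updated stock information.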

This is one of the "new" things I'd like my computers to do for me. And

it can all be done using existing technology.

12:00

-

A few years ago, I'd written Novell off. Although I was one of the

first to earn an Enterprise CNE (and, later, Master CNE) in North

Carolina, I'd let my certifications expire. There seemed to be no point

to keeping them. Novell seemed to be a stodgy old technology firm that

was stuck in the past, depending more every year on their installed

NetWare base to generate their revenues, which were declining year by

year. Almost no new NetWare installations were occurring, other than

those into established NetWare shops.

But, with their move from Utah to Cambridge and under the

stewardship of Jack Messman, Novell has succeeded in redefining itself

to become one of the most innovative and lively technology firms out

there. They've rebuilt their core business around Linux and open source

software, and in the process they've gone from being a ho-hum also-ran

technology company to one of the most important companies in the field.

Unlike some companies that talk the open-source talk but don't walk the

walk, Novell (along with IBM) really "gets" open source.

And, like IBM, Novell is willing to put its money where its mouth

is. After the failure of several previous attempts to kill Linux,

including the SCO debacle, Microsoft appears to be shifting its strategy

towards using patents to strangle Linux. On the face of it, this

appears to be a good strategy. After all, Linux source code is freely

available to anyone, including Microsoft, so it's easy enough to search

Linux for patent violations.

But I think Microsoft's patent offensive against Linux is doomed to

fail, for the simple reason that they're bringing a knife to a

gunfight. Microsoft's patent portfolio is truly pathetic when compared

against IBM's patent portfolio. IBM, don't forget, is a staunch

defender of Linux and open source software. IBM has undertaken not to

attempt to enforce any of its patents against Linux, and it is a small

step from there to actually using its patents to defend Linux against

Microsoft and its stooges. If Microsoft goes up against IBM in a patent

war, Microsoft loses. It's as simple as that.

And now Novell

has weighed in,

promising to use its own substantial patent portfolio to defend Linux

against all comers, and that most specifically includes Microsoft.

People who worry that Microsoft will succeed in using its massive

resources to kill Linux and OSS invariably look upon Microsoft as the

big bully and Linux as the skinny little kid who wears glasses. What

they forget is that Godzilla and now King Kong are in Linux's corner.

If the big bully isn't careful, he may end up as a grease spot on the

playground.

Wednesday, 13 October 2004

08:17

- Heh. I got email from one of my editors at O'Reilly last

night. He was

doing the copy edit on the second part of the Building the Perfect Bleeding-Edge PC

article, and he had a few questions. One of them had to do with the

name of a tool I mentioned in the article.

I called the tool "dykes", and the editor nearly had a heart attack. I

explained that this is what the tool is universally called by techs and

that, although I was sure there was a proper name for the tool, I

didn't remember what that might be. So I checked Sears and a couple

of other sources. The formal name is probably just as bad. They're

called "end

nippers" or, worse still, "butt nippers".

I suspect "dykes" originated as shorthand for "diagonal cutters", but

that's no longer what most people use it to mean. The techs I've known

call diagonal

cutters "diagonal cutters" or simply "cutters". When they say "dyke"

they invariably mean the flat-nosed plier that is used to cut

flush/end/butt terminations.

So, "dykes" they remain, despite the fact that my editor was concerned

about using a word that is also slang for lesbians. Which reminds me of

something that happened when I was at RIT, lo these many years ago.

Three of us were meeting up to go to some event or other--Dave, Ian

(who was British), and me. I spotted Ian in the lobby, but Dave was

nowhere to be seen. The event was about to start, so I asked Ian, "Have

you seen Dave?" His answer was classic, "He's in the gents, sucking a

fag."

11:05

- One thing I really need is a good crystal ball. I'm struggling

right now to write the Quick Reference that will appear on the inside

covers of the Pocket Guide. Here's a message I just sent to my editor

at O'Reilly:

I've decided just to stub out the video

adapter section. There's no point to me wasting time on it now, because

everything is up in the air. ATi and nVIDIA are both in the midst of a

huge transition to new-generation chipsets. I have no idea what the

pricing will be on nearly all of the new cards, which makes it

impossible to suggest head-to-head competitors. It's not even clear

which adapters will be PCI Express only versus PCI Express plus AGP.

The number of competing chipsets (and therefore adapter models) is also

staggering. Just nVIDIA now has the 6200, 6600, 6600 GT, 6600 Ultra,

6800, 6800 GT, and 6800 Ultra. nVIDIA just announced the 6200 Monday,

on no notice, and I expect they'll also announce 6200 GT and 6200 Ultra

versions at some point. ATi is even worse. And that's only their new

chipsets. Their older chipsets will remain in use, at least for a

while. And some of those older chipsets aren't very old at all. For

example, the ATi X600, X600 Pro, and X600 XT are now dead meat, in the

process of being replaced by the X700 series.

Even the tech people at ATi and nVIDIA don't really know what's going

on. Talk about a Chinese fire drill. Tom's Hardware is so disgusted

with the whole thing that they just

posted a

rant, with which I agree completely.

Anand, reviewing the nVIDIA 6200, concludes:

"If all of the cards in this review

actually stick to their MSRPs, then the clear suggestion would be the

$149 ATI Radeon X700. In every single game outside of Doom 3, the X700

does extremely well, putting even the GeForce 6600 to shame; and in

Doom 3, the card holds its own with the 6600. Unfortunately, with the

X700 still not out on the streets, it's tough to say what sort of

prices it will command. For example, the GeForce 6600 is supposed to

have a street price of $149, but currently, it's selling for closer to

$170. So, as the pricing changes, so does our recommendation.

In most cases, the GeForce 6200 does significantly outperform the X300

and X600 Pro, its target competitors from ATI. The X300 is priced

significantly lower than the 6200's $129 - $149 range, so it should be

outperformed by the 6200 and it is. The X600 Pro is a bit more

price-competitive with the GeForce 6200, despite offering equal and

even greater performance in certain cases.

However, we end up back at square one. In order for the 6200 to be

truly successful, it needs to either hit well below its $129 - $149

price range, or ATI's X700 needs to be much more expensive than $149.

In the latter case, if the card is out of your budget, then the 6200 is

a reasonable option, but in the former case, you can't beat the X700.

Given that neither one of the cards we're debating about right now are

even out, anything said right now would be pure speculation. But keep

an eye on the retailers. When these cards do hit the streets, you

should know what the right decision should be."

Of course, the whole ATi X600 series is now dead meat. This is simply

nuts.

Thursday, 14 October 2004

07:40

- FedEx showed up yesterday with a package from AMD with a

couple of Sempron processors (Socket A and Socket 754) and a Socket 939

Athlon 64 3500+. I hadn't been able to recommend the Athlon 64

previously because of the dearth of good motherboards available for it,

but that may change now that nVIDIA-based Athlon 64 motherboards are

beginning to appear in quantity.

One of the nice things about the AMD processors is that they consume

less power than Intel processors. That translates to less heat, quieter

fans, and a quieter system overall. I plan to build a Sempron system

with the Antec Phantom fanless power supply. With a Zalman CPU cooler,

that system should be nearly silent.

I really dislike Adobe Acrobat. I suppose it has its place for printed

documents, but for web presentation it is an abomination. Nothing like

having to scroll down one column, up, down the next column, up, down

the next, and so on ad infinitum. But, although I dislike Acrobat on

general principles, I really dislike the Linux implementation of

Acrobat Reader. Once it's fired up, it bonds itself to the Mozilla

Browser, and the only way to get rid of it is to close all instances of

Mozilla. Often, it's not convenient for me to do that, but there's

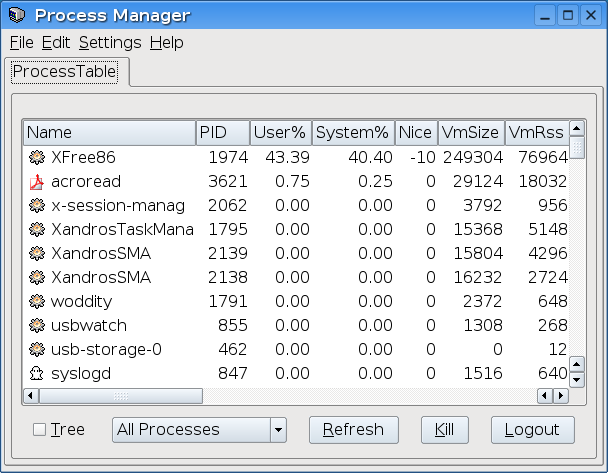

little choice. Acrobat, you see, also messes with XFree86. This screen

shot shows XFree86 at over 40% utilization, which is enough to make the

system slow to a crawl.

During a typical workday, I might have ten or more instances of Mozilla

open, with an average of perhaps half a dozen tabs each. If I

accidentally click on a PDF link, I'm screwed. As soon as Acrobat

Reader fires up, I'm doomed to have my system slow to a crawl. Not

immediately, but within a few minutes or perhaps an hour. It's bad

enough that I've seriously considered removing Acrobat Reader from my

systems and just using the built-in ability of Xandros File Manager to

display PDF files.

Which brings up another Xandros issue. The screensaver is flaky. It

stops working for no apparent reason. My main office desktop, which I'm

typing this on, runs Xandros 2.0. It's been installed for more than

three months now, and the screensaver continues to work as expected.

But this is the only Xandros system in the house that has a functioning

Xandros screensaver. Until a week ago, Barbara's Xandros 2.5 system had

a functioning screensaver, but it stopped working for no apparent

reason.

There is an easy solution. I open a run dialog, type xscreensaver-demo, and press

Enter. That screensaver works reliably and in fact has many more

features than the KDE screensaver that Xandros uses by default. I don't

know why Xandros doesn't enable it by default. As I have it set up on

Barbara's system right now, it will only work until the system is

rebooted. But I seem to remember down near the bottom of the man page

for xscreensaver-demo it tells me what I need to do to load it at boot

time.

I'm using N|vu to write this page.

It's available from Xandros Networks, but only if you're a premium

subscriber. I'd played around with N|vu a bit a couple of days ago

before I got the official Xandros version. I simply visited the N|vu

site and downloaded the package for SuSE. Double-clicking on the file

fired up Xandros Networks and extracted the package. It didn't install

it in the sense of adding it to the apt database or putting it on the

menu, but I was able to run N|vu by executing a script in the directory

I'd extracted it to. That was enough to convince me it was worth a

closer look, so I emailed my contact at Xandros to ask for a code to

access the premium site. They sent that to me within minutes, and I had

N|vu up and running minutes after that.

On the web site, N|vu claims

"Finally! A complete Web

Authoring System for Linux Desktop users as well as Microsoft Windows

users to rival programs like FrontPage and Dreamweaver."

which is, to put it kindly, a bit optimistic. I've never done more with

Dreamweaver than load it and spend a few minutes playing with it, but I

have used FrontPage for years, at first FrontPage 98 and later

FrontPage 2000. As far as I can see, albeit with admittedly little

experience as yet with N|vu, N|vu rivals FrontPage in the same way that

a Yugo rivals the Queen Elizabeth 2.

Well, perhaps that's a bit harsh, but, as far as I can see, N|vu is

still just an HTML page editor. They have what they call the "Site

Manager" but unless I'm missing a lot it's not a site manager in

anything like the same way that FrontPage is a site manager. I'll play

with N|vu a lot more. Perhaps my initial impressions are wrong. And, of

course, like a lot of open source software, N|vu is being actively developed.

Friday, 15 October 2004

08:53

- Was John Kerry dishonorably discharged? This guy

asks some questions that need to be answered. Although Mr. Kerry

introduced military service as a part of the campaign and has made much

of contrasting his service in Viet Nam with Mr. Bush's service in the

National Guard, Mr. Kerry has so far refused to allow all of his own

military records to be released.

Why has he not allowed those records to be released, if he has nothing

to hide? If there is no truth to the accusation, it would be easy

enough to refute. The fact that Mr. Kerry refuses to refute the

accusation is good reason to believe that he cannot refute it.

15:31

- I read two articles in The Inquirer today (Part 1 and Part 2) that

discuss the problems Intel faces over the next couple of years. The

author nailed the situation exactly. Intel is in deep trouble, as

evidenced by their recent cancellation of the 4 GHz Pentium 4. They

expected the Prescott core and its follow-ons to scale to 10 GHz or

more. They were wrong. At just under 4 GHz, the Prescott is out of headroom,

leaving Intel with nothing to counter the onslaught of new AMD

processors.

AMD, which not all that long ago looked to be down for the count, is

now in the catbird seat. They made some huge gambles, notably their SOI

(silicon on insulator) process. Those gambles have paid off, and now

AMD can easily trump the best Intel has to offer. The pendulum has once

again swung, and it's AMD's turn to deal from a position of strength.

I am reminded of the Who.

"The parting on the left is now a

parting on the right,

and the beards have all grown longer overnight..."

If I created my own dictionary, the AMD and Intel logos would appear

next to the word "competition", because these two companies embody the

meaning of the word. They are ferocious competitors, but both compete

honestly and above-board. They let their products speak for themselves,

unlike a large company I could name that thinks nothing of using even

the most underhanded tactics.

Although I'm sure AMD and Intel both wish the other company would

simply disappear, the fact is that their competition has been good for

everyone. Obviously, it has been good for consumers. If it weren't for

AMD, the fastest processors Intel sells would probably be stuck at well

under a gigahertz, and we'd pay $1,000 for the "fast" models. It's been

good for Microsoft, which can continue to introduce ever more bloated

operating systems and applications. Less obviously, it's been good for

AMD and Intel themselves, which might otherwise have rested on their

respective laurels.

The current situation is perilous for Intel, obviously, but never count

Intel out. They have massive resources, and they employ a lot of very

smart engineers. Less obviously, the current situation is perilous for

AMD. AMD has numerous weaknesses, not least their limited fab capacity.

If Intel literally disappeared tomorrow, leaving the entire market to

AMD, AMD could meet only 30% of the current demand for processors. AMD

is also famous for failing to execute, and they face several

challenges, including their move to a 65 nanometer process. If AMD is

not very careful, they could stumble. And a small stumble might suffice

to allow Intel to come roaring back.

The major problem AMD has always had is their apparent lack of a killer

instinct. Right now, when they have Intel down, is the time to pour it

on. AMD should be shipping faster and faster processors, as quickly as

they can get them out the door. Instead, when it has the advantage, AMD

has historically been satisfied to beat Intel only by a bit. I'm sure

AMD's MBAs are telling them that they leave money on the table by

shipping faster processors than they need to. That argument is

superficially attractive, but the fact is that AMD is Avis to Intel's

Hertz, and AMD has to not just beat Intel, but slaughter them.

If Intel struggles mightily to deliver a 3.8 GHz Pentium 4 that is

available only in small quantities, AMD shouldn't match Intel's best

effort with an Athlon 64 3800+ or even 4000+ of their own, priced at

about the same level as Intel's flagship processor. AMD should instead

flood the market with cheap Athlon 64 4000+, 4500+, and even 5000+

processors.

Some might argue that AMD's limited fab capacity means that strategy

would indeed leave large amounts of money on the table, but I think

they're missing the point. AMD's goal should be to develop and nurture

the perception in consumers' minds that AMD processors are first-rate

and that Intel is an also-ran. The way to do that is to kick Intel when

it's down.

AMD has problems of its own, of course, many of which can be traced to

its small size and limited resources compared to Intel and its

consequent lack of vertical integration. In concentrating all of its

efforts on producing a competitive processor, AMD has failed to devote

resources to match Intel's twin trump cards: chipsets and motherboards.

For a long time, that hampered the success of AMD processors. No matter

how good the processor, no one with sense will use it if that means

using a motherboard built around a third-rate chipset from VIA, SiS, or

ALi.

Fortunately for AMD, nVIDIA came to their rescue with the superb

nForce3-series chipsets for AMD processors. Also fortunately for AMD,

motherboards built on recent nForce3 chipsets by quality manufacturers

like ASUS are becoming widely available. In terms of stability

and compatibility, there is now little to choose between a Pentium 4

running on an Intel motherboard and an Athlon 64 running on an nForce3

motherboard.

My next project systems will be built around AMD processors. I'll be

building three systems, one with a Socket A Sempron 2800+, one with a

Socket 754 Sempron 3100+, and one with a Socket 939 Athlon 64 3500+.

These are long-term project systems that I will use heavily and

evaluate based on that real-world use. You'll hear lots more from me

about them over the coming months. But my initial belief is that these

current-generation AMD systems will present a very strong challenge to

Intel's Pentium 4 hegemony.

Saturday, 16 October 2004

Sunday, 17 October 2004

Copyright © 1998, 1999, 2000, 2001, 2002, 2003, 2004 by Robert Bruce Thompson. All Rights Reserved.